( – promoted by buhdydharma )

Slashdot has a quick blurb on a report in the TimesOnline. Micro$oft has just filed a very interesting patent:

Microsoft submitted a patent application in the US for a “unique monitoring system” that could link workers to their computers. Wireless sensors could read “heart rate, galvanic skin response, EMG, brain signals, respiration rate, body temperature, movement facial movements, facial expressions and blood pressure”, the application states.

Unique is right! Brain signals?! I wonder if they’re going to measure temperature rectally. I also wonder if the thing would know you hate it the minute you plug in. This is the kind of stuff they do with astronauts and pilots. I’m really glad there’s no diaper mentioned in the patent.

The Times continues:

The system could also “automatically detect frustration or stress in the user” and “offer and provide assistance accordingly”. Physical changes to an employee would be matched to an individual psychological profile based on a worker’s weight, age and health. If the system picked up an increase in heart rate or facial expressions suggestive of stress or frustration, it would tell management that he needed help.

Unions, along with civil and privacy rights advocates, are rightfully upset. Hugh Tomlinson, a data protection law expert at the suitably named Matrix Chambers, tells The Times, “This system involves intrusion into every single aspect of the lives of the employees. It raises very serious privacy issues.” No shit! Do you doubt me now when I say that society and computers are merging? Maybe you just didn’t think it would be that much.

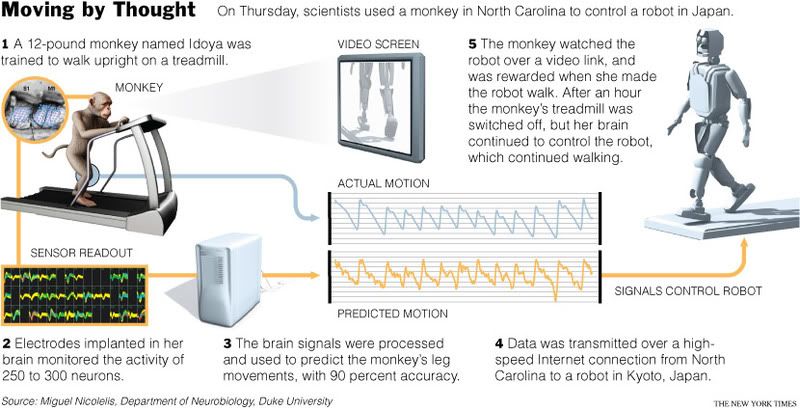

You might doubt that this stuff is achievable to any reasonable degree. In another blurb, Slashdot mentions a NYTimes article reporting that Duke University researchers have successfully used brain signals from a monkey to make a robot walk.

On Thursday, the 12-pound, 32-inch monkey made a 200-pound, 5-foot humanoid robot walk on a treadmill using only her brain activity. She was in North Carolina, and the robot was in Japan.

It was the first time that brain signals had been used to make a robot walk, said Dr. Miguel A. L. Nicolelis, a neuroscientist at Duke University whose laboratory designed and carried out the experiment.

The head of the team doing the research, Dr. Nicolelis, says that these experiments “are the first steps toward a brain machine interface that might permit paralyzed people to walk by directing devices with their thoughts.”

Well, that’s comforting. At least something good may come of it. Imagine the field day hackers might have with the computer/brain interface of their friends and enemies, especially High School hackers.

The monkey can do more with it than just stomp up and down:

The robot, called CB for Computational Brain, has the same range of motion as a human. It can dance, squat, point and “feel” the ground with sensors embedded in its feet, and it will not fall over when shoved.

She even learned how to control the robot’s legs separately from her own.

When Idoya’s brain signals made the robot walk, some neurons in her brain controlled her own legs, whereas others controlled the robot’s legs. The latter set of neurons had basically become attuned to the robot’s legs after about an hour of practice and visual feedback. Idoya cannot talk but her brain signals revealed that after the treadmill stopped, she was able to make CB walk for three full minutes by attending to its legs and not her own.

The monkey has electrodes in its head, and that is a touchy subject for humans. No problem. Functional MRI (fMRI) has the potential to lay bare the most intimate of cognitive processes. It is already being used in lie detection. An in-depth article at Wired gives more details on the technology:

Functional magnetic resonance imaging – fMRI for short – enables researchers to create maps of the brain’s networks in action as they process thoughts, sensations, memories, and motor commands. Since its debut in experimental medicine 10 years ago, functional imaging has opened a window onto the cognitive operations behind such complex and subtle behavior as feeling transported by a piece of music or recognizing the face of a loved one in a crowd. As it migrates into clinical practice, fMRI is making it possible for neurologists to detect early signs of Alzheimer’s disease and other disorders, evaluate drug treatments, and pinpoint tissue housing critical abilities like speech before venturing into a patient’s brain with a scalpel.

It’s great for Big Brother as well!

Now fMRI is also poised to transform the security industry, the judicial system, and our fundamental notions of privacy. I’m in a lab at Columbia University, where scientists are using the technology to analyze the cognitive differences between truth and lies. By mapping the neural circuits behind deception, researchers are turning fMRI into a new kind of lie detector that’s more probing and accurate than the polygraph, the standard lie-detection tool employed by law enforcement and intelligence agencies for nearly a century.

I hate to quote so much, but I can’t say it any better, and you probably wouldn’t believe me:

For No Lie MRI founder Joel Huizenga, scanner-based lie detection represents a significant upgrade in “the arms race between truth-tellers and deceivers.” Both Laken and Huizenga play up the potential power of their technologies to exonerate the innocent and downplay the potential for aiding prosecution of the guilty. “What this is really all about is individuals who come forward willingly and pay their own money to declare that they’re telling the truth,” Huizenga says. (Neither company has set a price yet.) Still, No Lie MRI plans to market its services to law enforcement and immigration agencies, the military, counterintelligence groups, foreign governments, and even big companies that want to give prospective CEOs the ultimate vetting. “We’re really pushing the positive side of this,” Huizenga says. “But this is a company – we’re here to make money.”

What was all that stuff about un-torture like waterboarding being necessary, when you know they can have as many of these as they want? I suppose pulling out fingernails is more fun. Remember this next time the torture debate comes up.

As usual, privacy advocates and civil liberties groups were deeply concerned about all this. These expensive and bulky machines will doubtless drop in price and size as time and market forces dictate. Imagine this becoming portable and maybe even ubiquitous. The incentives for large corporations and governments to misuse this are incredible. It’s inevitable that it will be used on people without their consent or even their knowledge. Do you have any idea how our legal system, let alone our Constitution, will handle this? Fuck it, how will we handle it?!

Welcome to the Future!™

14 comments

Author

the real scary stuff is on the last couple of pages.

Very soon they’ll have it figured out, if they haven’t already, so that they don’t need any electrodes to monitor what you’re thinking. That’s going to make “being off the grid” a whole lot more important to privacy.

is already ahead of me

omg this is some scarey shit…

i really don’t want ppl monitoring what i think…

Ray Kurzweil says everything will (probably) be OK.

http://www.amazon.com/Singular…

That we have such a benevolent and responsible government!

I offer high praise to all of our leaders, and have nothing but praise for them!

(yeah, just trying to kiss some ass BEFORE they start reading my mind to see what I really think!)

n/t